This is the second part of a two-part series on developmental evaluation and collective impact. Read part 1.

In Part 1, Kathleen Holmes with the Missouri Foundation for Health explained why developmental evaluation is an important part of the Foundation’s infant mortality initiative. She also shared some of the first steps the Foundation, backbone organizations and the evaluators took together to build a shared understanding of developmental evaluation and its role in helping develop a nascent collective impact approach.

She also described how the backbones generated evaluation questions by breaking down the issue of infant mortality into its complex, complicated, and simple components. The developmental evaluation team (Spark Policy Institute and the Center for Evaluation Innovation) worked with the backbone organizations to answer those questions, two of which are explored here.

Exploring Evaluation Question 1: Surfacing Underlying Dynamics

Two backbone organizations working together in one collective impact effort asked the question:

- How do potential partners (including within the backbone organizations) view and prioritize infant mortality, including such things as their values, views about causes of infant mortality, needs and barriers, and experiences working on infant mortality?

To answer the question, the team used a rapid-fire data collection process, going from asking the question to sharing results in under a month, over Christmas too! Staff in both organizations completed a short ten-question survey that explored their beliefs about the drivers of infant mortality, potential solutions, the roles their organizations could take on, how the two organizations could work together, and what the future of this work could be.

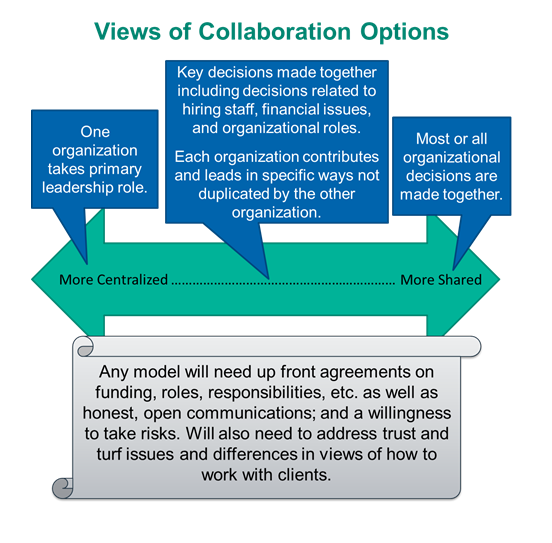

Next, key staff from both organizations worked with the evaluation team to interpret the results of the survey and plan how to present them back to the rest of the staff. The participants identified visual methods for sharing the information back – how to diagram it and make it easy to relate to (see Figure 1). These key staff shared the results with the rest of the backbone staff at a meeting where both organizations came together to talk about and make decisions on how they wanted to work together. The evaluator wasn’t even present – the information was owned by the staff.

The dialogue at that meeting and in the months that followed highlighted significant challenges in working together. While both organizations wanted full collaboration and decided to pursue it, implementing shared decision-making proved challenging. In June, the developmental evaluator engaged again with the staff to develop an approach to shared decision-making that they could experiment with and refine.

In this process, the backbone organizations, Foundation and the evaluator learned together about the relationships, surfacing underlying dynamics and addressing barriers to trust. This is a classic use of developmental evaluation – bringing issues into the dialogue that are already present, but not being discussed or actively addressed.

Exploring Evaluation Question 2: Engaging External Stakeholders

Another backbone organization in a different community was struggling with how best to engage stakeholders. They recognized that their environment had an overabundance of collective impact and other collaborative efforts, and that gaining the attention of diverse stakeholders was going to be difficult. That led them to ask:

- What is a process and structure for engaging stakeholders – how to stage the engagement and how to motivate participation?

The developmental evaluation’s first step was a facilitated meeting to explore the current vision for how to engage stakeholders, from the messages being planned to the messengers, delivery mechanisms and the design of the planning process that would be conducted with the stakeholders.

After refining the ideas, the evaluation’s next step was to help the backbone test out their plan before they put it into action. In other words, rather than waiting until everything was underway to discover it wasn’t quite the right approach, the evaluation sought to identify ways to improve and refine the approach before implementation.

The developmental evaluators contacted key stakeholders identified by the backbone and conducted short interviews. The interviews included a component of message testing, both a generic message relevant to any stakeholder and a customized message relevant to the interest the stakeholder represented. Then the interview explored the viability of the planning process, including seeking to surface any potential challenges the process might experience along the way.

The findings were shared back with the backbone organization in time for their meeting with a leadership group to develop key messages and their plan for action. Some of the findings supported their current messages, but other findings suggested the sector-specific messages weren’t accomplishing what they hoped. For example, interviewees reported that the language in their housing sector-specific message did effectively connect the dots between housing, transportation and infant mortality, but also made the scope feel huge and daunting, which could decrease participation.

The information helped them think about how to better message and engage stakeholders across many sectors. The backbone staff highlighted how they have been able to use the information to adapt, and the fundamental role it played in helping design their strategy. This is another common use of developmental evaluation – making the road ahead feel less uncertain and helping surface the pros and cons of different options.

Lessons Learned:

The developmental evaluation team has played an important role in helping the backbone organizations to explore how to make their collaboratives function and best do their work. They continue to explore other types of data collection that may be needed, from systems mapping to developing and testing theories of change. This process has highlighted a number of lessons learned about the application of developmental evaluation in a collective impact setting:

- Early in a collective impact effort, there are so many moving parts and such a high level of uncertainty that developmental evaluation is both critical and difficult to prioritize. Moving quickly to a practical, useful result from the evaluation is necessary to raise its priority.

- The developmental evaluator’s toolbox of research and facilitation approaches needs to be significant and varied. Sometimes a key informant interview or focus group will do, but often things like emergent learning, systems mapping, premortems, intense period debriefs, and many other approaches can be invaluable in this type of process.

- The best developmental evaluator for the job might not be local, but there are some negative consequences to engaging someone from a distance. While relationships can be built from afar and trust developed through periodic in-person meetings, when the really tough issues come up (like racism as a driver of infant mortality, and whether and how to address it), an embedded local developmental evaluator who can be at all the meetings and have in-person one-on-ones might be better positioned to help work through the issue.

Figure 1. Example of the results of evaluation question 1.